This is also called the “true negative rate.”
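The true negative rate (also known as specificity) is the fraction of actual negatives that a classifier correctly labels as negative: TN / (TN + FP). Below is a minimal sketch that assumes you already have the confusion-matrix counts. The function name is illustrative only.

```python
def true_negative_rate(tn: int, fp: int) -> float:
    """Specificity: share of actual negatives correctly classified as negative.

    tn: true negatives, fp: false positives (negatives wrongly flagged positive).
    """
    actual_negatives = tn + fp
    if actual_negatives == 0:
        raise ValueError("no actual negatives; TNR is undefined")
    return tn / actual_negatives


# Example: 90 negatives correctly rejected, 10 wrongly flagged as positive.
print(true_negative_rate(tn=90, fp=10))  # 0.9
```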

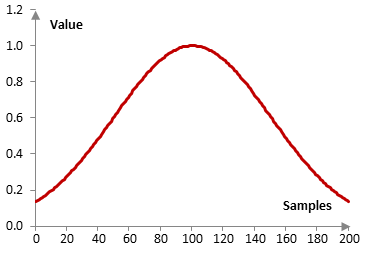

#GAUSSIAN WINDOW FUNCTION IN INMR SERIES#

Depending on which kernel function is chosen, the inferred prediction can differ, as demonstrated here (check this page for more explanations): different kernel functions applied to the same data points lead to different predictions.

Here is the most interesting bit about Gaussian processes. Once we have the function generator, we don't use any kind of gradient descent to settle on a set of parameters and end up with one function. Instead, we use our dataset to eliminate all the functions that don't fit it. What we have left are all the functions, still infinitely many, that do fit our dataset. Next, for every point x in the feature space, we can take the average value of these infinitely many functions and use it as our prediction. Moreover, we can also see the distribution of these functions. (Unfortunately, since we are not reducing the model to parameters, we need to compute over the entire dataset whenever we want to make a prediction. This issue has given rise to many variants of Gaussian processes.)

Gaussian processes come with a lot of additional features. If we assume a certain level of noise in our dataset, a Gaussian process does more than give us an infinite set of functions: it can attach a probability to each function.

By now, you should have a feel for what kind of thing a Gaussian process is and how it relates to the typical ML framework. This is why the most standard definition is: A Gaussian process is a probability distribution over possible functions that fit a set of points.

Now you're ready to get into the details of how Gaussian processes actually work. Below are the resources to get you further.

This is from a course taught in 2013 at UBC by Nando de Freitas. Very good for those who are mathematically inclined.

A tutorial by Richard Turner, who is an expert in the topic. It gives excellent intuitive insight into how Gaussian processes work under the hood. This is exactly what I needed AFTER I understood what kind of thing a Gaussian process is.

Here is what is basically a note on the tutorial. It gave me a good contextual, higher-level, bird's-eye view of Gaussian processes from the mathematical perspective. This series is an excellent resource!
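The Gaussian process ideas described above can be sketched in Python with NumPy. These ideas are the function generator, keeping only the functions that fit the data, averaging them for a prediction, and the effect of the kernel choice. The sketch rests on several assumptions that are not taken from the resources: a zero-mean prior, a squared-exponential (RBF) kernel and Gaussian observation noise. The function names are also hypothetical. The "eliminate functions that don't fit" step is not done by literally filtering sampled functions. Instead, it is done in closed form by conditioning a multivariate Gaussian on the data, which gives the posterior mean (the prediction) and the posterior variance (the spread of the remaining functions).

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential (RBF) kernel matrix between 1-D input arrays a and b."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)


def sample_prior(x, n_samples=3, length_scale=1.0, seed=0):
    """The 'function generator': draw random functions from the zero-mean GP
    prior, evaluated at points x. Returns an array of shape (n_samples, len(x))."""
    rng = np.random.default_rng(seed)
    cov = rbf_kernel(x, x, length_scale) + 1e-9 * np.eye(len(x))  # jitter for stability
    return rng.multivariate_normal(np.zeros(len(x)), cov, size=n_samples)


def gp_posterior(x_train, y_train, x_test, length_scale=1.0, noise=1e-2):
    """Condition the GP prior on the training data.

    Keeps (in closed form) only the functions consistent with the data and
    returns their pointwise mean (the prediction) and variance (their spread).
    `noise` is the assumed observation-noise variance.
    """
    k_train = rbf_kernel(x_train, x_train, length_scale) + noise * np.eye(len(x_train))
    k_cross = rbf_kernel(x_train, x_test, length_scale)  # shape (n_train, n_test)
    k_test = rbf_kernel(x_test, x_test, length_scale)

    # Cholesky factorisation of the n_train x n_train matrix: this step touches
    # the whole training set, which is why plain GPs scale poorly.
    chol = np.linalg.cholesky(k_train)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))  # K^-1 y
    mean = k_cross.T @ alpha

    v = np.linalg.solve(chol, k_cross)
    var = np.diag(k_test) - np.sum(v**2, axis=0)
    return mean, np.maximum(var, 0.0)  # clip tiny negative round-off


# Toy data (hypothetical): four noisy-free samples of sin(x).
x_train = np.array([-2.0, -1.0, 0.0, 1.5])
y_train = np.sin(x_train)
x_test = np.linspace(-3.0, 3.0, 7)

print("prior samples:\n", np.round(sample_prior(x_test), 2))

# Same data, two different kernels (length scales) -> different predictions.
for ls in (0.3, 1.0):
    mean, var = gp_posterior(x_train, y_train, x_test, length_scale=ls)
    print(f"length_scale={ls}: mean={np.round(mean, 2)}, std={np.round(np.sqrt(var), 2)}")
```

Near the training points the posterior variance shrinks to below the noise variance. Far from them it returns to the prior variance of 1. The factorisation of the training-set matrix costs O(n³) in the number of training points, which is the scalability issue mentioned above. The two length scales illustrate how a different kernel on the same data gives different predictions.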
